I have a photographic memory, and I’m a time-space synesthete. That means I can visualize, in photorealistic detail, basically any place I’ve ever been. I can also imagine nonexistent places and fly around them in my brain like I’m in a video game.

It’s a cool thing to be able to do for myself, and the ability to imagine a particular shot in advance is helpful in my career as a photographer. I’d love to share these mental images with others, but there’s a catch: I suck at drawing. I can imagine a place like the Cathedral of Notre Dame or the interior of my first apartment in realistic detail, but if I pick up a pen and try to draw what I’m seeing in my mind’s eye, it comes out looking like the cheerful, aimless scribbles of a two-year-old.

I was excited, then, to learn about an artificial intelligence system from researchers at Kyoto University that is able to do something remarkable: Leveraging breakthroughs in deep learning and generative networks, it can read the images a person sees in their mind’s eye and transform them into digital photographs with up to 99% accuracy.

The system works for images the person is seeing in front of their eyes and ones they’re imagining. Currently, the images are low resolution, and the subject needs to be inside an MRI machine for the system to work. But it points to an amazing possibility, and one I never expected to see within my own lifetime — as the tech improves and brain reading hardware gets better, computers will be able to scan our brains and transform our mental images into actual photos we can save and share. And this could arrive within a decade.

The Kyoto University researchers performed their experiments in 2018 and published the results in the journal PLOS Computational Biology in 2019. A 2018 report in Science Magazine details how the researchers’ system works. The researchers placed subjects into an fMRI scanner and recorded activity in their brains. Unlike a traditional MRI, an fMRI measures blood flow in the brain, allowing scientists to determine which brain regions are most active as a subject performs a task. While recording brain signals from the subjects’ visual systems, the researchers showed them thousands of images, displaying each image several times. That gave them a huge database of brain signals, with each set of signals corresponding to a specific image.

The researchers then fed all this data into a deep neural network (DNN), which they trained to produce images. Neural networks are fantastic pattern detectors, and for each photo shown to the test subjects, the researchers had the neural network attempt to produce an image matching the observed patterns of brain activity, refining its output more than 200 times. The end result was a system that could take in fMRI data showing a subject’s brain activity and paint a picture based on what it thought each subject was seeing.

The researchers then threw in a twist: They handed the DNN’s output to an already trained generative network. This type of network is relatively new and is one of the most exciting advances in A.I. to occur over the past decade. These specialized neural networks take in basic inputs and generate wholly new photos and videos, which can be remarkably realistic. Generative networks are the tech behind deepfakes, artificial people, and many Snapchat filters. In this case, the researchers used their generative network to normalize images read from their subjects’ brains and make them more photo-like.

The researchers’ final system took in brain activity data from subjects, turned it into crude photos with the DNN, and then used the generative network to polish those photos into something much more realistic. To test the system’s output, the researchers showed the images it generated to a set of human judges. The judges were also shown a collection of possible input images and asked to match the images read from subjects’ brains to the most similar input image.

More than 99% of the time, the judges matched the output image produced by the system to the actual input image the subject had been viewing. That’s a stunning result: Using only brain signals and A.I., the researchers reconstructed the images in subjects’ brains so well that neutral human judges could match them up to real-world images almost 100% of the time.

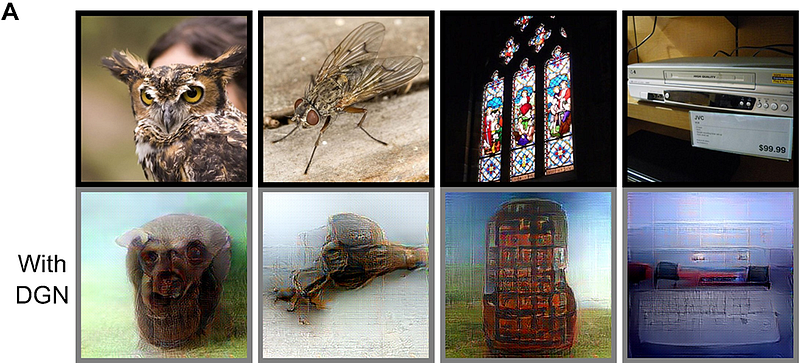

You can judge the results for yourself. The first row of images below shows the original photos shown to subjects in the experiment. The second row shows the reconstructed images built from their brain signals. They’re not perfect by any means, but they’re definitely recognizable.

The researchers then upped the ante. Instead of reading from the subject’s brains while they looked at an image, the researchers asked them to imagine an image they had seen before and hold it in their mind’s eye. Brain scans from this task again yielded usable images. (This makes sense, as imagining images uses many of the same brain regions as seeing actual images.)

The images constructed solely from imagination weren’t nearly as good as those created when the subjects were looking at an actual photo. But when subjects were asked to imagine simple, high-contrast shapes, like a plus sign or a circle on a blank background, and their brains were scanned, the resulting reconstructed images matched with real-world examples 83.2%— far better than chance.

The challenges of reading imaginary photos may have less to do with the researchers’ techniques and more to do with the subjects. Holding a single image in your mind for a long period of time is hard, and people vary considerably in how photorealistic their memory is. If the researchers trained subjects to visualize specific images — perhaps using techniques derived from meditation — or if they used synesthetes like me, who often have extremely vivid mental imagery (the technical term is hyperphantasia), they might get much better results.

At the moment, images derived from subjects’ neural signals are relatively crude. But then, so were the first digital photographs. The researchers’ results prove that it’s possible to read images from people’s brains. Now that the cat is out of the bag, there are a variety of ways that the technology could improve — and fast.

Firstly, the resolution of the input data could improve dramatically. The fMRI protocol used by the researchers yielded spatial resolution of around two millimeters. That’s not bad, but it still means that the researchers were aggregating the responses of about 100,000 neurons into each data point they measured. Brain implants like an experimental device from Elon Musk’s Neuralink promise to read from individual neurons, a resolution orders of magnitude higher than that of an fMRI.

This super-high resolution currently requires an invasive brain implant to achieve. It’s possible, though, that as deep neural networks improve, researchers will be able to get similar data using noninvasive, easy-to-wear brain interfaces like EEGs. Currently, EEGs have resolutions in the centimeter range, but new optical technologies improve upon this, and coupling EEGs with super-resolution neural networks improves their effective resolution dramatically. As EEGs and deep networks improve, software could potentially read brain images using a noninvasive consumer device mounted atop a user’s head, while still achieving better resolution than today’s fMRIs. Higher resolution would yield a more accurate look into a subject’s mind and make it easier for a computer to reconstruct their mental pictures.

The most promising development, though, isn’t on the input side — it’s in the generative networks that the researchers used to interpret brain data and turn it into actual pictures. Today’s best generative networks are incredibly powerful — they can take remarkably sparse inputs and yield realistic images. OpenAI’s Dall-E network, for example, can take a written prompt like “an armchair in the shape of an avocado” and create a photo of such an armchair that looks like it was created by a human designer.

If a network like Dall-E could be trained to use brain data instead of text as an input, it could likely take even the faintest patterns of brain activation and determine the exact mental picture that a person was holding in their mind’s eye. Doing this would require capturing a large amount of training data — asking people to imagine millions of different photos while recording their brain signals for the network to analyze. If this data gathering could be done — and generative networks continue to improve — it’s conceivable that a noninvasive consumer-level brain interface could read accurate pictures from your head within a decade.

If that happens, the implications would be massive. Artistic and design-based fields would transform dramatically. Imagine being able to think of your vision for the perfect kitchen (or the perfect user interface for your startup’s app) and have a computer transform your ideas into a realistic photo. You could hand off the photo to an architect or developer and have your dream space built to your exact, imagined specifications.

Photographers might no longer need cameras — we could simply take “mental pictures” of a scene and download them from our brains at our leisure. Product designers could create mockups of a new device in their brain, capture them, and turn them into actual models with a 3D printer. Directors could imagine a movie scene and then create storyboards from brain imagery, or even imagine and capture entire animated films.

Such a technology could be a huge benefit for human rights, too. Imagine a situation where the victim of a massacre or mass persecution could recall their experiences and have their memories turned into actual images, which could then be used to help bring perpetrators to justice. Questions about the veracity of the images would abound, but the ability to capture vivid images of crimes and injustices would have huge implications for the law.

There would also be a lot of potentially troubling military applications. Imagine using the technology during an interrogation to extract the faces of enemy agents from a prisoner’s mind without their consent. Or imagine an intelligence agency sending a spy to walk through a facility disguised as a tourist or contractor, and then downloading images of the facility from their brain later on and using them to create a detailed floor plan — the better to guide a military strike.

At a personal level, there are obvious privacy and security implications to a device designed to read images from your brain. These would likely become most alarming if the technology was combined with an always-on brain implant like Neuralink’s device, since the device could be reading images from your brain at any point without you knowing. Clunkier implementations of the tech would likely be more secure — if you had to put on a special cap (or slide into an fMRI) to have your brain read, it would at least be obvious when your thoughts were subject to recording.

One can still imagine awkward situations arising from a brain-reading machine. Think about being a subject in a lab experiment and being asked to imagine something innocuous, like a new type of cellphone, and instead imagining something embarrassing, like the principal investigator in their underwear. The white bear effect dictates that the harder you try not to picture something, the more likely it is that it will pop into your brain. Perhaps mind reading is best done in private.

As a person who already experiences the world in an extremely visual way, I can’t wait for these technologies to arrive. The researchers have released the code for their system on Github, so if you have a $5 million fMRI scanner at home, you can start reading minds right now. Otherwise, to play with the tech yourself, you’ll likely have to wait until someone pairs strong generative networks with consumer brain interfaces. Or, I suppose, you could cave and take a drawing class.

Originally written in 2021.