In 2020, I got the chance to Beta test OpenAI’s GPT-3, a remarkable system that can generate nearly any kind of text, using only a simple prompt.

Experimenting With GPT-3 Felt Like Witnessing a Technological Revolution

The potential for the new artificial intelligence program is nearly unfathomable (onezero.medium.com)

Enter “Write me a sea chanty about Joe Biden’s inauguration” into GPT-3, for example, and it will duly respond with exactly that.

At the time, OpenAI was also working on DALL-E. This new system is very much like GPT-3, except that it generates full images instead of just text. Give it a prompt like “An armchair in the shape of an avocado” and it will give you a bunch of illustrations of avocado armchairs, created out of thin air.

As a photographer and a person with a photographic memory, I knew I needed to test this system. I immediately emailed asking OpenAI for access, only to learn that DALL-E wouldn’t be ready to Beta test for quite a long time.

Fast forward two years, and that “long time” has passed. OpenAI has officially begun opening up DALL-E Beta testing to a very select group of researchers, artists, and experimenters. Yesterday, I learned that I was among that group and received my invite to Beta test DALL-E.

Here’s what I did with it on my first day.

https://www.youtube.com/embed/PF01_D7Tb1s?feature=oembed

Friendly Washing Machines

DALL-E excels at creating whimsical scenes that have never existed before, and perhaps could never exist in reality. That’s one of the fun things about using the platform — you can enter in basically any idea you can dream up, and DALL-E will generate a picture based on it.

I have a fancy AI-enabled washer and dryer from LG. The two appliances communicate with each other. If I wash something in the washer, it uses sensors to determine what I washed and communicates this to the dryer. The dryer then sets its temperature, speed, etc. to appropriately dry that kind of fabric.

I’ve always imagined my washer and dryer as friends. So I asked DALL-E to generate a photo of “A washer and dryer who are friends.” Here’s what it gave me.

I love this. For one thing, DALL-E determined that I was probably looking for an illustration since the scene I was asking for was clearly imaginary. The washer and dryer here look a bit personified, with controls that suggest faces. They’re clearly having a conversation. One looks sad, and the other appears to be comforting them. It’s good to see that they have such a positive relationship.

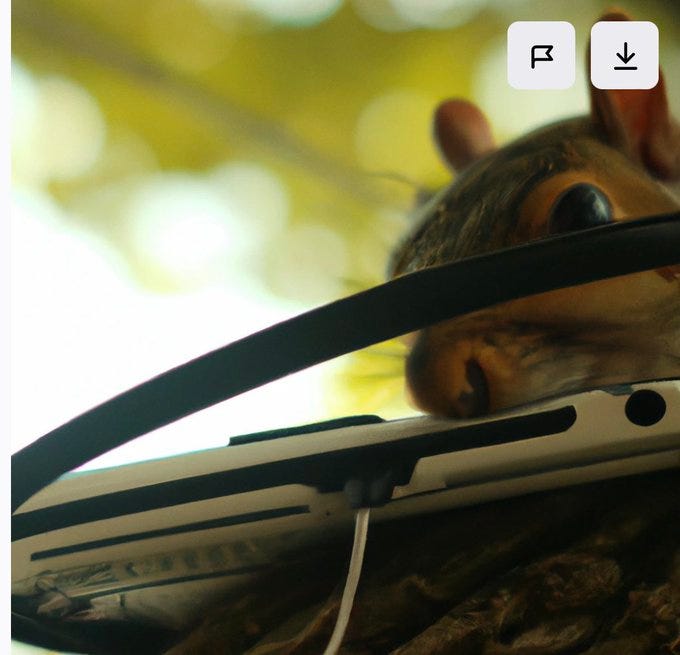

Internet-eating Squirrels

I knew that my former editor/boss Megan Morrone would have some good ideas for DALL-E prompts, so I asked her for one. Sure enough, she provided me with the gem: “A squirrel eating my Internet.”

Here’s what DALL-E did with that:

I love that it chose to make this photorealistic. The squirrel looks quite real. But I also like how it chose to depict “the Internet” (or at least, Megan’s Internet). It’s kind of a mashup of an iPhone and a router, with a random cord, something that looks like a Wi-Fi antenna, and a 3.5mm audio jack on the right.

The squirrel also appears to have taken the Internet up into a tree to eat it, since there’s bark in the foreground. If a squirrel were to eat the Internet, I assume it would indeed carry it to a safe spot in a tree first.

Images From My Memory

Those were fun, but I had a deeper and more significant idea I wanted to test. I’m a time-space synesthete, which means a couple of things. For one, I see time as a visual construct — kind of like a donut encircling my body. Like many other time-space synesthetes, I also have a photographic memory and can remember nearly any place I’ve been or thing I’ve seen in photorealistic detail.

This is very cool in many ways, but annoying because, while I can visualize nearly anything in my mind’s eye, I’m a terrible illustrator. I can call up a mental picture of nearly anything I’ve ever seen, but I lack the drawing skills to turn that mental picture into an image that I can actually share.

Ever since I heard about DALL-E, I wondered if it would allow me to take the images I can visualize in my mind’s eye and transform them into actual photos.

From my early testing, it appears to do exactly that.

To test this idea, I visualized a scene that I remembered seeing of my dog Lance sitting on a floral print settee in my house. I described the scene to DALL-E as “Photorealistic Bichon Frise sitting on wide floral settee by a window with a puppy cut.”

I knew it was unlikely to create the exact scene I had in my mind’s eye on its first try. But the cool thing with DALL-E is that for each prompt, it doesn’t just give you a single image — it gives you a bunch of them. This allowed me to tweak my prompt, scroll through its output, and hone the images it generated until they were as close as possible to the image in my mind’s eye.

Here’s what I ended up with.

I later realized that I hadn’t just seen Lance sitting on the settee — I had actually taken a snapshot of him sitting there. That provided me the chance to compare DALL-E’s output to the actual scene I was remembering.

Here’s the original photo I took, which is pretty close to the picture I had of the scene in my mind’s eye. (I remember viewing Lance straight-on instead of from an angle, but otherwise this captures my mental image pretty well.)

DALL-E’s image isn’t the exact scene I remember. But it’s pretty darn close — right down to a very similar floral print on the settee. It’s certainly close enough to communicate the general feel and content of my mental image to an outside viewer.

I tried the same thing with a mental image of the golf course at the Ritz-Carlton, Half Moon Bay. Again, I’ve visited that course many times, and I have a strong visual image of it in my mind’s eye. I used the same technique as for the Bichon photo, writing and refining prompts and choosing among DALL-E’s outputs until I got very close to the image in my mind’s eye.

Here’s the result:

For this one, I don’t have a reference photo to look back at — the image I was basing this shot on is entirely in my mind’s eye. That’s even cooler to me, because the final image I generated with DALL-E captures pretty faithfully the Half Moon Bay scene I was imagining, yet it’s based totally on my own visual recollections.

I’m really looking forward to continuing to test DALL-E. I’m excited to see how it duplicates different artistic styles, and how it creates illustrations on par with human illustrators. But I’m also very excited to keep experimenting with how I can use it as a creative tool to turn the scenes in my mind’s eye into real images.

Stay tuned here as I share more from my testing.

Now, here’s a question for you:

What would you like to see DALL-E generate? Enter your prompt in a Response to this article and I’ll try to generate your image.